When it comes down to the technical skill and ability of our teams, Derivatives Connect is far superior.

Large, agile development and operations (DevOps) capabilities. Strong enterprise application capabilities, in particular SAP, including proprietary tools.

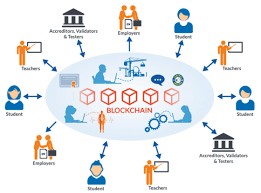

A blockchain is a digitized, decentralized, public ledger of all cryptocurrency transactions. Constantly growing as ‘completed’ blocks (the most recent transactions) are recorded and added to it in chronological order, it allows market participants to keep track of digital currency transactions without central recordkeeping. Each node (a computer connected to the network) gets a copy of the blockchain, which is downloaded automatically.

Originally developed as the accounting method for the virtual currency Bitcoin, blockchains, which use what's known as distributed ledger technology (DLT), are appearing in a variety of commercial applications today. Currently, the technology is primarily used to verify transactions within digital currencies, though it is possible to digitize, code and insert practically any document into the blockchain. Doing so creates an indelible record that cannot be changed; furthermore, the record’s authenticity can be verified by the entire community using the blockchain instead of a single centralized authority.
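To illustrate why a record inserted into a blockchain is hard to change unnoticed, here is a minimal sketch of a hash-linked ledger. It is not how Bitcoin or any production blockchain is implemented (there is no network, consensus or mining); the function names `block_hash`, `add_block` and `is_valid` and the sample transactions are assumptions made up for this example.

```python
import hashlib
import json


def block_hash(index, prev_hash, transactions):
    """Return the SHA-256 digest of a block's contents."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "transactions": transactions},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, transactions):
    """Append a block that commits to the hash of the previous block."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    block = {
        "index": index,
        "prev_hash": prev_hash,
        "transactions": transactions,
        "hash": block_hash(index, prev_hash, transactions),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else "0" * 64
        if block["prev_hash"] != expected_prev:
            return False
        recomputed = block_hash(
            block["index"], block["prev_hash"], block["transactions"]
        )
        if block["hash"] != recomputed:
            return False
    return True


# Hypothetical sample data: two blocks added in chronological order.
ledger = []
add_block(ledger, ["alice pays bob 5"])
add_block(ledger, ["bob pays carol 2"])
print(is_valid(ledger))  # True

# Tampering with an earlier block breaks its stored hash.
ledger[0]["transactions"] = ["alice pays bob 500"]
print(is_valid(ledger))  # False
```

Because every node holds a copy of the chain, any participant could run a check like `is_valid` independently, which is the sense in which authenticity is verified by the community rather than a central authority.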

Machine learning is an application of artificial intelligence (AI) that provides systems the ability to automatically learn and improve from experience without being explicitly programmed. Machine learning focuses on the development of computer programs that can access data and use it to learn for themselves.

The process of learning begins with observations or data, such as examples, direct experience, or instruction, in order to look for patterns in data and make better decisions in the future based on the examples that we provide. The primary aim is to allow computers to learn automatically, without human intervention or assistance, and adjust their actions accordingly.
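As a small illustration of learning a pattern from examples rather than from hand-written rules, the sketch below fits a straight line to a handful of observations using ordinary least squares and then predicts an unseen value. Least squares is only one of many possible learning techniques, and the data (`hours`, `scores`) and function names are hypothetical.

```python
def fit_line(xs, ys):
    """Learn slope and intercept from example (x, y) pairs via least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical observations: hours studied vs. test score.
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]

model = fit_line(hours, scores)
print(predict(model, 5.0))  # 60.0
```

Nothing in the program states the rule "two points per hour"; the relationship is recovered from the examples alone.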

Microsoft Azure is a platform as a service (PaaS) solution for building and hosting solutions using Microsoft’s products in Microsoft’s data centers. It is a comprehensive suite of cloud products that allows users to create enterprise-class applications without having to build out their own infrastructure. The Azure Service Platform comprises three cloud-centric products: Windows Azure, SQL Azure and Azure App Fabric controller. These are in addition to the application hosting infrastructure facility.

Static websites deliver HTML, JavaScript, images, videos and other files to your website visitors, and contain no application code. They are best for sites with few authors and relatively infrequent content changes, typically personal and simple marketing websites. Static websites are very low cost, provide high levels of reliability, require almost no IT administration, and scale to handle enterprise-level traffic with no additional work.

Enterprise Resource Planning (ERP) is software built for organizations across different industrial sectors, regardless of their size and strength.

The ERP package is designed to support and integrate almost every functional area of a business, such as procurement of goods and services, sales and distribution, finance, accounting, human resources, manufacturing, production planning, and logistics and warehouse management.

Every business, regardless of the industry it belongs to, requires connected systems with efficient information flow from one business process to another. Business Process Integration (BPI) plays an important role in overcoming integration challenges, allowing organizations to connect systems internally and externally.

Big data refers to a process that is used when traditional data mining and handling techniques cannot uncover the insights and meaning of the underlying data. Data that is unstructured or time sensitive or simply very large cannot be processed by relational database engines. This type of data requires a different processing approach called big data, which uses massive parallelism on readily-available hardware.

Big data is, as the name suggests, a collection of large datasets that cannot be processed using traditional computing techniques. It is not merely data; it has become a complete subject that involves various tools, techniques and frameworks.

Hadoop is an Apache open source framework written in Java that allows distributed processing of large datasets across clusters of computers using simple programming models.

Hadoop Common: These are Java libraries and utilities required by other Hadoop modules. These libraries provide filesystem and OS-level abstractions and contain the necessary Java files and scripts required to start Hadoop.
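MapReduce is one of the simple programming models Hadoop supports. The sketch below imitates its map, shuffle and reduce steps in plain Python on a single machine to show the shape of the model. It does not use any Hadoop API, and the function names and sample documents are assumptions for illustration only.

```python
from collections import defaultdict


def map_phase(document):
    """Map step: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle step: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce step: combine the values for each key into a single count."""
    return {key: sum(values) for key, values in groups.items()}


# Hypothetical input; on a real cluster each document could be mapped
# on a different machine.
documents = ["big data big clusters", "data on clusters"]

mapped = [pair for doc in documents for pair in map_phase(doc)]
counts = reduce_phase(shuffle(mapped))
print(counts["big"], counts["data"], counts["clusters"])  # 2 2 2
```

The map step needs no knowledge of other documents, which is what lets a framework spread the work across many computers.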

The ability to provision Azure Cosmos DB throughput at the database level could come with pricing sticker shock for some users of Microsoft's multimodel database.

Microsoft continues to add Azure Cosmos DB features, including a capability to provision database throughput that creates new pricing considerations for users of the multimodel distributed cloud database.

GPU databases offer a new way to process data. 451 Research analyst James Curtis discusses where they fit in big data applications, particularly for parallel processing.

New data technologies are continually arriving these days. Among the latest entrants are GPU databases.

DevOps is the blending of tasks performed by a company's application development and systems operations teams. The term DevOps is used in several ways. In its broadest meaning, DevOps is an operational philosophy that promotes better communication between development and operations as more elements of operations become programmable. In its narrowest interpretation, DevOps describes the part of an organization’s information technology (IT) team that creates and maintains infrastructure.

DevOps grew out of one of the key benefits of the Agile software development method, which enables an organization to release products quickly. The addition of further processes, however, differentiates DevOps from Agile.

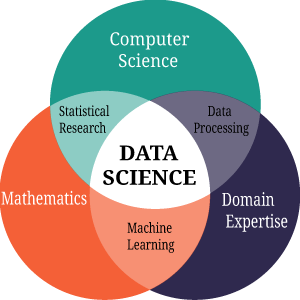

Data is to a data scientist what oxygen is to human beings. Data science is a profession in which statistically adept practitioners work on data at every stage, from data collection to data cleansing, data mining and statistical analysis, through forecasting and predictive modelling, and finally data optimization. A data scientist does not provide just any solution; they provide the most optimized solution out of the many available.

Data science is an interdisciplinary field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from data in various forms, both structured and unstructured.

Data analytics refers to qualitative and quantitative techniques and processes used to enhance productivity and business gain. Data is extracted and categorized to identify and analyze behavioral data and patterns, and techniques vary according to organizational requirements. Data analytics is also known as data analysis.

MPP (massively parallel processing) is the coordinated processing of a program by multiple processors that work on different parts of the program, with each processor using its own operating system and memory. Typically, MPP processors communicate using some messaging interface. In some implementations, up to 200 or more processors can work on the same application. An "interconnect" arrangement of data paths allows messages to be sent between processors. Typically, the setup for MPP is more complicated, requiring thought about how to partition a common database among processors and how to assign work among the processors. An MPP system is also known as a "loosely coupled" or "shared nothing" system.
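The partitioning question can be made concrete with a small sketch. The code below splits a dataset across three hypothetical nodes by key, lets each node compute a result from its own partition only, and then combines the partial results. The nodes are simulated sequentially in one process, so this shows the shared-nothing data layout rather than real parallel execution; the row data and function names are made up for the example.

```python
def partition(rows, num_nodes):
    """Assign each row to exactly one node based on its customer id."""
    shards = [[] for _ in range(num_nodes)]
    for customer_id, amount in rows:
        shards[customer_id % num_nodes].append((customer_id, amount))
    return shards


def local_sum(shard):
    """Work done by one node using only the data it owns."""
    return sum(amount for _, amount in shard)


# Hypothetical (customer_id, amount) rows.
rows = [(1, 10), (2, 20), (3, 30), (4, 40), (5, 50)]

shards = partition(rows, 3)
partials = [local_sum(shard) for shard in shards]  # one result per node
total = sum(partials)  # combined by a coordinator

print(partials, total)  # [30, 50, 70] 150
```

In a real MPP system the partial results would travel over the interconnect as messages, and the choice of partitioning key would determine how evenly the work is spread.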

A relational database management system (RDBMS) is a collection of programs and capabilities that enable IT teams and others to create, update, administer and otherwise interact with a relational database. Most commercial RDBMSes use Structured Query Language (SQL) to access the database, although SQL was invented after the initial development of the relational model and is not necessary for its use.
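As a brief illustration of creating, updating and querying a relational database through SQL, the sketch below uses SQLite via Python's standard `sqlite3` module with an in-memory database. SQLite is chosen here only because it needs no setup; the `trades` table and its rows are hypothetical.

```python
import sqlite3

# An in-memory database that disappears when the connection closes.
conn = sqlite3.connect(":memory:")

# Create: define a table with typed columns.
conn.execute(
    "CREATE TABLE trades ("
    "id INTEGER PRIMARY KEY, "
    "symbol TEXT NOT NULL, "
    "quantity INTEGER NOT NULL)"
)

# Insert a few hypothetical rows using parameterized statements.
conn.executemany(
    "INSERT INTO trades (symbol, quantity) VALUES (?, ?)",
    [("ABC", 100), ("XYZ", 50), ("ABC", 25)],
)

# Update: change an existing row.
conn.execute("UPDATE trades SET quantity = 60 WHERE symbol = ?", ("XYZ",))

# Query: aggregate quantities per symbol.
rows = conn.execute(
    "SELECT symbol, SUM(quantity) FROM trades GROUP BY symbol ORDER BY symbol"
).fetchall()
print(rows)  # [('ABC', 125), ('XYZ', 60)]

conn.close()
```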

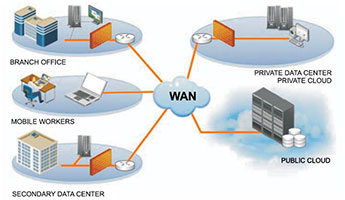

Network infrastructure is the hardware and software resources of an entire network that enable network connectivity, communication, operations and management of an enterprise network.

Networking refers to the total process of creating and using computer networks, with respect to hardware, protocols and software, including wired and wireless technology. It involves the application of theories from different technological fields, like IT, computer science and computer/electrical engineering.

Copyright © 2020 Derivatives Connect Pvt Ltd. All rights reserved.